Welcome!

I’m currently a software/research engineer based in Seattle, Washington.

Some of my work has been featured in outlets like TechCrunch, Fast Company, and BBC.

Read on for details on some projects I’ve worked on.

Projects

Iconary: A Pictionary-Based Game for Testing Multimodal Communication with Drawings and Text (2021)

Conducted experiments to investigate the efficacy of finetuning pretrained language models for cooperative gameplay in a vision-language based game.

Paper Abstract: Communicating with humans is challenging for AIs because it requires a shared understanding of the world, complex semantics (e.g., metaphors or analogies), and at times multi-modal gestures (e.g., pointing with a finger, or an arrow in a diagram). We investigate these challenges in the context of Iconary, a collaborative game of drawing and guessing based on Pictionary, that poses a novel challenge for the research community. In Iconary, a Guesser tries to identify a phrase that a Drawer is drawing by composing icons, and the Drawer iteratively revises the drawing to help the Guesser in response. This back-and-forth often uses canonical scenes, visual metaphor, or icon compositions to express challenging words, making it an ideal test for mixing language and visual/symbolic communication in AI. We propose models to play Iconary and train them on over 55,000 games between human players. Our models are skillful players and are able to employ world knowledge in language models to play with words unseen during training.

| Game | Publication | Code |
|---|---|---|
| Play Game Here | EMNLP 2021 Paper | Github |

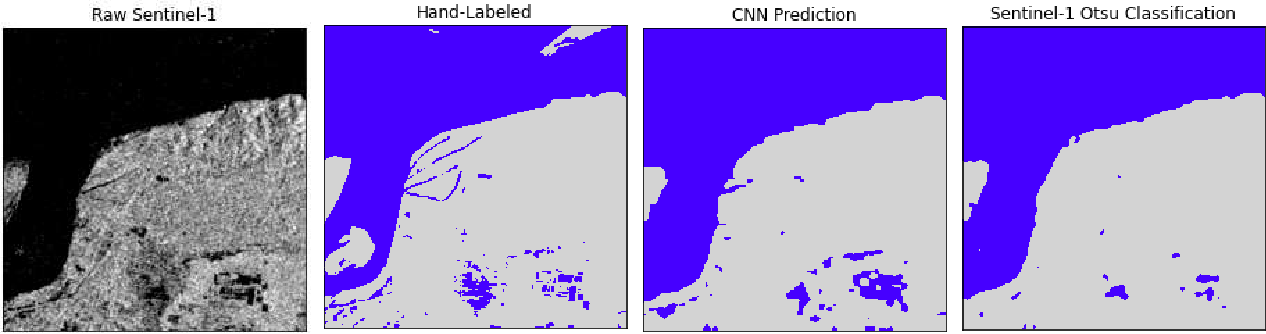

Sen1Floods11: a georeferenced dataset to train and test deep learning flood algorithms for Sentinel-1 (2020)

Worked with remote sensing experts at Floodbase (formerly Cloud to Street) to design and implement a dataset for training deep learning computer vision models to predict flooded areas from satellite imagery. Trained and evaluated flood segmentation models with this data.

Paper Abstract: Accurate flood mapping at global scale can support disaster relief and recovery efforts. Improving flood relief efforts with more accurate data is of great importance due to expected increases in the frequency and magnitude of flood events due to climate change. To assist efforts to operationalize deep learning algorithms for flood mapping at global scale, we introduce Sen1Floods11, a surface water data set including raw Sentinel-1 imagery and classified permanent water and flood water. This dataset consists of 4,831 512x512 chips covering 120,406 km2 and spans all 14 biomes, 357 ecoregions, and 6 continents of the world across 11 flood events. We used Sen1Floods11 to train, validate, and test fully convolutional neural networks (FCNNs) to segment permanent and flood water. We compare results of classifying permanent, flood, and total surface water from training a FCNN model on four subsets of this data: i) 446 hand labeled chips of surface water from flood events; ii) 814 chips of publicly available permanent water data labels from Landsat (JRC surface water dataset); iii) 4,385 chips of surface water classified from Sentinel-2 images from flood events and iv) 4,385 chips of surface water classified from Sentinel-1 imagery from flood events. We compare these four models to a common remote sensing approach of thresholding radar backscatter to identify surface water. Results show the FCNN model trained on classifications of Sentinel-2 flood events performs best to identify flood and total surface water, while backscatter thresholding yielded the best result to identify permanent water classes only. Our results suggest deep learning models for flood detection of radar data can outperform threshold based remote sensing algorithms, and perform better with training labels that include flood water specifically, not just permanent surface water. 
We also find that FCNN models trained on plentiful automatically generated labels from optical remote sensing algorithms perform better than models trained on scarce hand labeled data. Future research to operationalize computer vision approaches to mapping flood and surface water could build new models from Sen1Floods11 and expand this dataset to include additional sensors and flood events. We provide Sen1Floods11, as well as our training and evaluation code at: https://github.com/cloudtostreet/Sen1Floods11 .

| Publication |
|---|
| Sen1Floods11 |

World’s Most Accurate Population Density Maps (HRSL) (2018-2019)

The High Resolution Settlement Layer (HRSL) is the world’s most accurate population density map. Working as an engineer on the World.AI team at Facebook, I found a novel combination of semi-supervised and weakly supervised learning that vastly improved the accuracy of these maps. The approach automatically scaled the training data set from around 1 million images to hundreds of millions of images and improved the accuracy of the final model by more than 12%. It also automated the process of getting training data for new countries, allowing the team to begin releasing population maps for more countries in a few months than it had in all of the preceding years of the project. The work was published in CVPR 2019’s Computer Vision for Global Challenges Workshop and explained in detail in a pair of blog posts published on Facebook’s AI and Tech blogs. It is being used today by organizations like the Red Cross and the Bill and Melinda Gates Foundation to save lives, and it is a core part of Facebook’s Disease Maps. More info about the impact of this work is available on Facebook’s Data For Good website. You can also read about the work in Fast Company, TechCrunch, MIT Technology Review, and many other outlets.

| Blogs | Publication | Press |
|---|---|---|
| AI, Tech | CVPR 2019 CV4GC | Fast Company, TechCrunch, MIT Tech Review |

Map With AI (2018-2019)

Map With AI is a suite of tools from Facebook to enable faster and more accurate mapping for the open mapping community. Working as an engineer on the World.AI team at Facebook, I developed a weakly supervised training regime to improve the accuracy and generalizability of the model that powers the Map With AI suite of tools. Because of this work, we were able to produce accurate results for countries on 6 continents. Prior to the development of this method, results could only be accurately and reliably produced for Indonesia and Thailand, two countries on the same continent. On a sampling of test countries, this training regime led to an average relative accuracy improvement of 62%. This work is detailed in a Facebook AI Blog post and was published in CVPR 2019’s Computer Vision for Global Challenges Workshop. More info on the Map With AI suite of tools can be found on the Facebook Tech Blog. You can read about the project in outlets like BBC and TechCrunch.

| Blogs | Publication | Press |
|---|---|---|
| AI, Tech | CVPR 2019 CV4GC | BBC, TechCrunch |

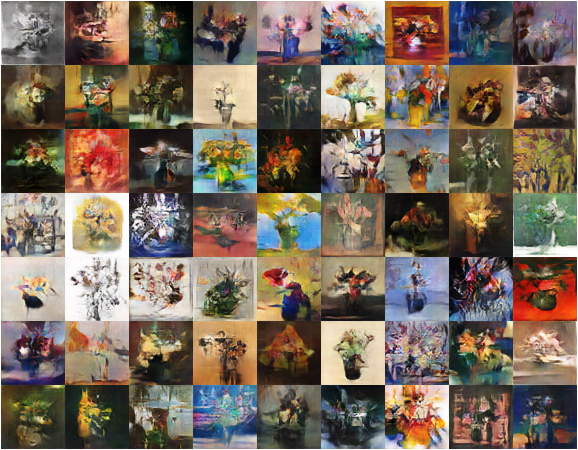

GANGogh (2017)

Explored the use of Generative Adversarial Networks for the generation of novel art. We were able to generate some of the earliest GAN-based art. Our approach relied on the improved stability of the Wasserstein metric to employ global conditioning in an AC-GAN framework, conditioning the art generation on genres such as flowers or faces. My collaborator, Kenny, wrote up a blog post about the work. The work was also featured in TechCrunch.

| Blog | Press |

|---|---|

| Medium | TechCrunch |